Effortless POST requests with uncurl

Uncurl

Today I learned about a handy little project called uncurl.

No, this is not a tool to take the coolest sport out of the Olympics or de-Hermione Anne Hathaway. It takes the text of a curl command and converts it to Python code using the Requests library.

Julie Andrews hates volume??

So, for instance, giving uncurl a curl command that customizes the User-Agent for a request to getfedora.org will output the corresponding Python request, all ready to be pasted straight into your IDE. Throw an import requests at the top of your Python file and you have a fully functioning Python script.

    > uncurl 'curl -H "User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:60.0) Gecko/20100101 Firefox/60.0" https://getfedora.org/'

    requests.get("https://getfedora.org/",
        headers={
            "User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:60.0) Gecko/20100101 Firefox/60.0"
        },
        cookies={},
        auth=(),
    )
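If you're curious what a conversion like this involves, here's a minimal sketch using only the standard library. To be clear, this is not uncurl's actual implementation: parse_curl is a hypothetical helper, and it only understands a handful of curl flags (-H, -d/--data/--data-raw, -X, and --compressed).

```python
import argparse
import shlex


def parse_curl(command: str) -> dict:
    """Hypothetical helper: turn a simple curl command into request parts."""
    # "Copy as cURL" joins lines with backslash-newline; flatten those first.
    tokens = shlex.split(command.replace("\\\n", " "))
    if not tokens or tokens[0] != "curl":
        raise ValueError("expected a command that starts with 'curl'")

    # Only a small, illustrative subset of curl's flags is modelled here.
    # Anything else makes argparse report an error and exit.
    parser = argparse.ArgumentParser(prog="curl", add_help=False)
    parser.add_argument("url")
    parser.add_argument("-H", "--header", dest="headers", action="append", default=[])
    parser.add_argument("-d", "--data", "--data-raw", dest="data")
    parser.add_argument("-X", "--request", dest="method")
    parser.add_argument("--compressed", action="store_true")
    args = parser.parse_args(tokens[1:])

    # Split each "Name: value" header on the first colon only,
    # so values that contain colons (like URLs) stay intact.
    headers = {}
    for raw_header in args.headers:
        name, _, value = raw_header.partition(":")
        headers[name.strip()] = value.strip()

    # Like curl, default to POST when a body is present and GET otherwise.
    method = args.method or ("POST" if args.data is not None else "GET")
    return {"method": method, "url": args.url, "headers": headers, "data": args.data}


parts = parse_curl('curl -H "User-Agent: Mozilla/5.0" https://getfedora.org/')
print(parts)
```

From a dictionary like this, emitting a requests.get(...) or requests.post(...) call is mostly string formatting. The real tool handles far more of curl's surface than this sketch does.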

Pairing with Developer Tools

So this is a nice little tool. But pair it with the option in your browser’s Developer Tools to copy a request as a curl command and the code practically writes itself (but in a ~fun~ way, not in a possibly-illegal-GitHub-Copilot-y way). Let’s illustrate with an example.

Putting it Together

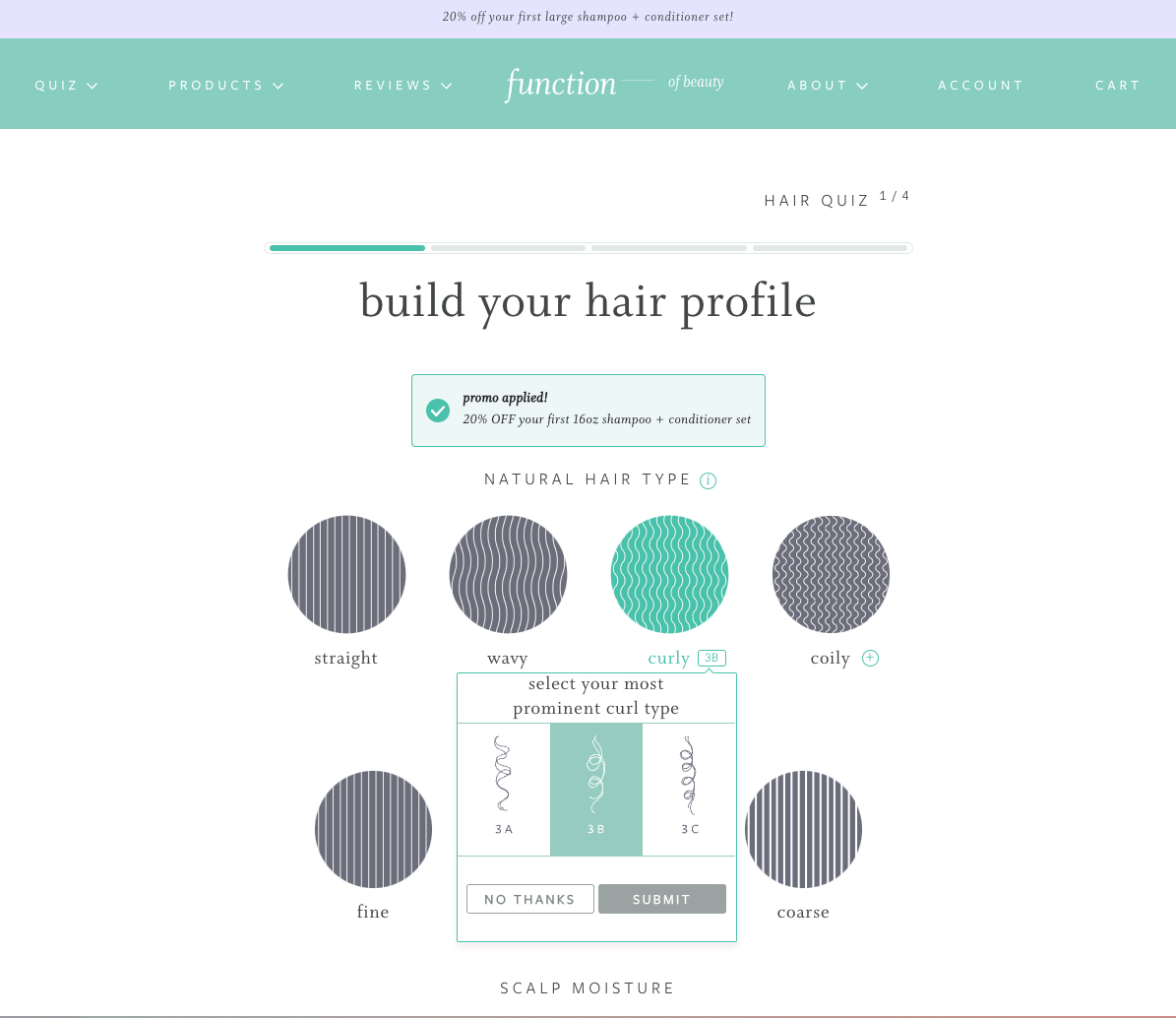

In solidarity with the curly-haired women maligned by the 2000s glow-up montage ✊, we’ll head over to functionofbeauty.com’s hair profile builder and prepare to lock in some moisture.

If this post is a mini-class, would that make it METHODofbeauty.com?

Let’s say the information we want to parse only appears when we select curl type “3B” and click “Submit”.

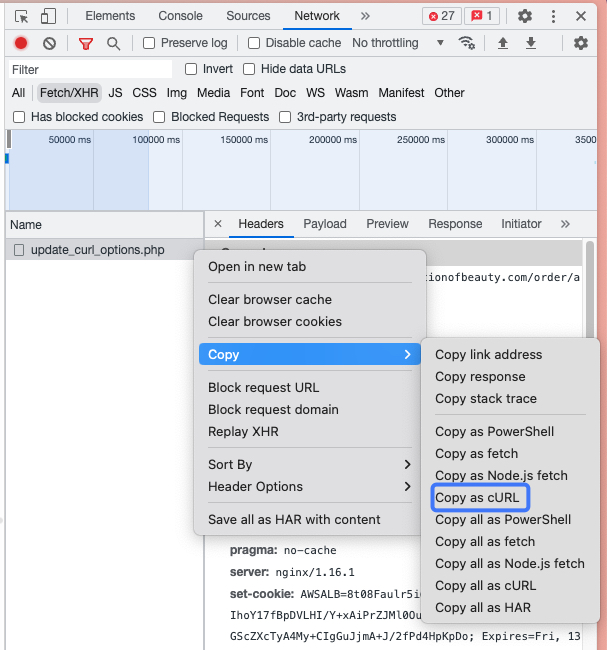

We’ll open up DevTools and navigate to the Network tab to see what request is made so we can replicate it in our Python code.

And there it is! It’s not a trivial little GET, though, but a POST. We actually have to send some form-encoded data, and we’ll get the full HTML we need back, ready for parsing. We could write our request manually in Python, but why bother? We have conditioning to get back to. All we need to do is use this handy little option to copy the request we triggered as curl:

Now we have our full curl command, locked and loaded in our clipboard!

    curl 'https://www.functionofbeauty.com/order/ajax/update_curl_options.php' \
      -H 'authority: www.functionofbeauty.com' \
      -H 'accept: */*' \
      -H 'accept-language: en-US,en;q=0.9' \
      -H 'content-type: application/x-www-form-urlencoded; charset=UTF-8' \
      -H 'dnt: 1' \
      -H 'origin: https://www.functionofbeauty.com' \
      -H 'referer: https://www.functionofbeauty.com/order/' \
      -H 'sec-ch-ua: "Not?A_Brand";v="8", "Chromium";v="108"' \
      -H 'sec-ch-ua-mobile: ?0' \
      -H 'sec-ch-ua-platform: "macOS"' \
      -H 'sec-fetch-dest: empty' \
      -H 'sec-fetch-mode: cors' \
      -H 'sec-fetch-site: same-origin' \
      -H 'user-agent: Mozilla/5.0' \
      -H 'x-requested-with: XMLHttpRequest' \
      --data-raw 'curlTypeOptions%5B%5D=&hairType=curly&hairStructure=&moisture=&1=3&curl_type=3a' \
      --compressed
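That --data-raw value is the form-encoded body, and it’s a little hard to read with the percent-escapes in it. Here’s a small sketch, using only the standard library, that decodes it into name/value pairs. The helper name decode_form_body is made up for this post.

```python
from urllib.parse import parse_qsl

# The body copied from the --data-raw flag above.
RAW_BODY = (
    "curlTypeOptions%5B%5D=&hairType=curly&hairStructure="
    "&moisture=&1=3&curl_type=3a"
)


def decode_form_body(body: str) -> list:
    """Hypothetical helper: decode a form-encoded body into (name, value) pairs."""
    # keep_blank_values=True keeps fields such as "moisture=" that were sent empty.
    return parse_qsl(body, keep_blank_values=True)


for name, value in decode_form_body(RAW_BODY):
    print(f"{name!r}: {value!r}")
```

Decoded, %5B%5D turns out to be a pair of square brackets, so the first field is really named curlTypeOptions[].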

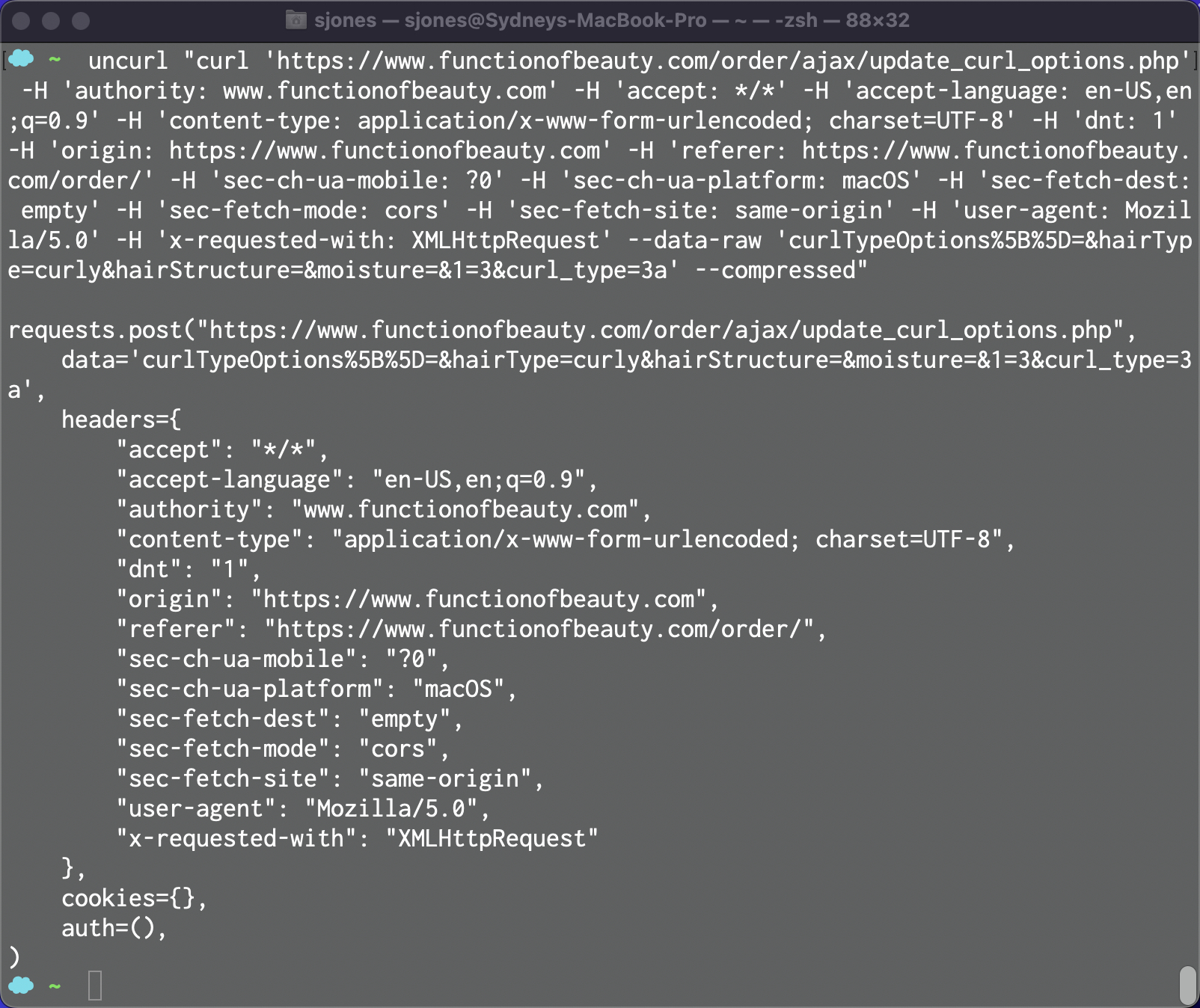

We’ll head over to the terminal, feed this whole command (in quotes) to uncurl, and watch the uncurl magic happen:

Copy and paste this output to a file, drop an import requests at the top, pip install requests into your venv (you are using a per-project venv, aren’t you 👀), and you have a functioning little Python script!

    import requests

    response = requests.post(
        "https://www.functionofbeauty.com/order/ajax/"
        "update_curl_options.php",
        data="curlTypeOptions%5B%5D=&hairType=curly&hair"
        "Structure=&moisture=&1=3&curl_type=3a",
        headers={
            "accept": "*/*",
            "accept-language": "en-US,en;q=0.9",
            "authority": "www.functionofbeauty.com",
            "content-type": "application/x-www-form-urlencoded; "
            "charset=UTF-8",
            "dnt": "1",
            "origin": "https://www.functionofbeauty.com",
            "referer": "https://www.functionofbeauty.com/order/",
            "sec-ch-ua-mobile": "?0",
            "sec-fetch-dest": "empty",
            "sec-fetch-mode": "cors",
            "sec-fetch-site": "same-origin",
            "user-agent": "Mozilla/5.0",
            "x-requested-with": "XMLHttpRequest",
        },
        cookies={},
    )

    print(response.content.decode(encoding="utf8"))
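Once the request lives in Python, you’ll probably want to vary it instead of replaying the exact same body. Here’s a hedged sketch of one way to do that with the standard library: decode the body, swap one field, and re-encode it. The helper with_field is hypothetical, and whether the site accepts any particular curl_type value (like the 3b used below) is an assumption on my part.

```python
from urllib.parse import parse_qsl, urlencode

# The same body string that uncurl generated above.
ORIGINAL_BODY = (
    "curlTypeOptions%5B%5D=&hairType=curly&hair"
    "Structure=&moisture=&1=3&curl_type=3a"
)


def with_field(body: str, name: str, value: str) -> str:
    """Hypothetical helper: return the form body with one field replaced."""
    # Keep empty fields so the re-encoded body matches what the browser sent.
    fields = parse_qsl(body, keep_blank_values=True)
    updated = [(key, value if key == name else old) for key, old in fields]
    # If the field was not present at all, add it at the end.
    if all(key != name for key, _ in fields):
        updated.append((name, value))
    return urlencode(updated)


print(with_field(ORIGINAL_BODY, "curl_type", "3b"))
```

The resulting string can go straight into the data= argument in place of the hard-coded one. Requests also accepts a dict or a list of tuples for data= and does the form-encoding itself, if you’d rather skip the string handling.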

Bonus: pipx

When you have a tool like this, where you’re mostly going to be using it on the command line, it’s a great time to think about installing or running it with pipx. pipx is a tool that installs any package you want into an isolated venv and adds any entrypoints to your system PATH, so it’s always available to you. As a result, you A) avoid 🔥 dependency hell 🔥 and B) know that no matter what venv you have activated, you’ll still have your command-line tools at the ready.

- Install pipx
  - On mac: brew install pipx and pipx ensurepath
- Run pipx run uncurl ... to just run uncurl one time, or, if you want to keep it on your system to run any time, pipx install uncurl
  - After running pipx install, you can run uncurl like normal from anywhere!
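If you want to double-check that the entrypoint really is reachable no matter which venv is active, here’s a tiny sketch using the standard library’s shutil.which. The helper name find_tool is made up for this post.

```python
import shutil
from typing import Optional


def find_tool(name: str) -> Optional[str]:
    """Hypothetical helper: return the full path of a command on PATH, or None."""
    return shutil.which(name)


location = find_tool("uncurl")
if location:
    print(f"uncurl found at {location}")
else:
    print("uncurl is not on PATH here")
```

Run it from inside a few different venvs: if pipx set things up as described, the same path should come back each time.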

Bonus Bonus: HTTPX

One last quick win… for the most part, we can swap the requests library out for httpx with minimal compatibility issues (see here for a few exceptions). HTTPX has both sync and async APIs and a ton of helpful and Pythonic methods/attributes. For instance, notice how, here, I can easily get the content as a string and do some really intuitive error checking.

    import httpx

    response = httpx.post(
        "https://www.functionofbeauty.com/order/ajax/"
        "update_curl_options.php",
        # HTTPX takes raw, already-encoded bodies via content=;
        # passing a str to data= is deprecated there.
        content="curlTypeOptions%5B%5D=&hairType=curly"
        "&hairStructure=&moisture=&1=3&curl_type=3a",
        headers={
            "accept": "*/*",
            "accept-language": "en-US,en;q=0.9",
            "authority": "www.functionofbeauty.com",
            "content-type": "application/x-www-form-urlencoded; "
            "charset=UTF-8",
            "dnt": "1",
            "origin": "https://www.functionofbeauty.com",
            "referer": "https://www.functionofbeauty.com/order/",
            "sec-ch-ua-mobile": "?0",
            "sec-fetch-dest": "empty",
            "sec-fetch-mode": "cors",
            "sec-fetch-site": "same-origin",
            "user-agent": "Mozilla/5.0",
            "x-requested-with": "XMLHttpRequest",
        },
        cookies={},
    )

    # is_success is True for any 2xx status code.
    if response.is_success:
        print(response.text)
    else:
        print(
            f"Received bad status code {response.status_code}"
        )

Plus, if you’re using this functionality to screen scrape, some sites block requests generated through the Requests library, but HTTPX, being less well-known, can sometimes sneak through.

I’d say that’s pretty fabulous.
